How to Read Science News and Spot Misinformation

It’s a hot year for science news. With COVID-19, wildfires, hurricanes, and new discoveries, the news and internet are blowing up with stories about the latest vaccine studies, the effectiveness of masks, the impact of climate change, and speculation about life on other planets. The disasters (and successes) of 2020 have generated a lot of interest in the science behind them. Unfortunately, that also means misinformation is spreading fast.

Take, for example, early rumors on whether there was a harmful connection between ibuprofen (Advil, Motrin) and COVID-19. The stories arose from a comment at a World Health Organization press briefing that was taken out of context. The public was advised to consult their doctors about symptoms, rather than ignore them or self-medicate. At the same time, a French health minister had commented on another study that also mentioned the “dangers” of ibuprofen.

Although the actual study on blood pressure medications had found no link between ibuprofen and disease outcomes, the timing was sufficient to spur public speculation.

As a chemistry student, you already have the skills to research science that gets reported in the news and shared on social media. But do you know what red flags to look for to weigh the credibility of articles?

Read on to learn how you can exercise your science chops to quickly figure out whether a story is trustworthy.

Healthy skepticism

Your first skill in dissecting the validity of a story is an analytical approach. You should be asking a lot of questions: What is this [reporter, scientist, Twitter user] trying to tell me and why? Does what they say fit what is known about science? Do the data adequately support the claims?

In your science classes, you use a healthy skepticism to evaluate experiments, results, and reports and papers. The same skill applies outside of class.

Even experienced writers with strong science backgrounds have short deadlines and a lot of pressure to engage readers. As a result, it is easy for a writer to misunderstand an important fact, for an editor to oversell a headline, and for a story to present an inaccurate result as accurate.

And, if a publisher has a specific agenda, creating a website, video, or even a paper that looks credible but provides bad information is all too easy.

In 2015, headlines about the fat-trimming benefits of chocolate appeared in newspapers, magazines, and websites around the world. “Why You Must Eat Chocolate Daily.” “Excellent News: Chocolate Can Help You Lose Weight.” “Pass the Easter Egg!” The stories originated from a real study with real data.

Written by a Ph.D.-level researcher and published in a scientific journal, the study had all the signs of authenticity. However, its author was a science journalist, John Bohannon, whose Ph.D. came from studying bacteria, not humans. Bohannon, pretending to be a research director of the Institute of Diet and Health (a now-defunct website, not a scientific organization), listed himself as the first author on “Chocolate with high cocoa content as a weight-loss accelerator,” and got the study published in the open-access journal International Archives of Medicine.

Confessing his deeds in a Gizmodo article, Bohannon wrote that he wanted to know just how easy it is to “turn bad science into the big headlines behind diet fads.” Knowing the paper would never survive peer review in a reputable journal, Bohannon published in the International Archives of Medicine for a sizable fee. After the Gizmodo article appeared, the journal’s editor claimed the paper was never really published in the first place. In fact, one of the potential challenges with open-access publications is that they are often supported by fees charged to authors, which can favor those with the means or willingness to pay. An entire industry of “predatory journals” has arisen to publish questionable science for a fee.

Reading side to side

It is all too easy to assume a source is trustworthy based on a well-written article, exciting discovery, or catchy illustrations. After all, professional communication is also a hallmark of reputable sources. To escape the lure of a hyped-up story, the Stanford History Education Group (SHEG) recommends using a verification method called lateral reading.

Lateral reading is a term borrowed from professional fact-checkers who typically open new tabs to quickly learn what they can about the source, rather than reading from top to bottom on a web page. To verify whether the science is real, the data accurate, and the source legitimate, you research the information sources just as much as the information itself. Consider:

- Is the article from a legitimate news organization?

- Does the news organization hire trained and objective science reporters?

- What is the agenda of the organization posting the article?

- Is the source a scientist, public relations office, or business?

- Are there separate studies that have produced similar results?

Even credible sources may not be credible in all topics. For example, your cousin Veronica the astrochemist may be a great source for sharing news about the Venusian atmosphere, but she is probably less knowledgeable about whether you should go gluten-free.

Be wary, too, of sensationalized headlines and obvious conflicts of interest. A study sponsored by the Tobacco Growers of America claiming that smoking cures Parkinson’s disease, or a claim distributed by Aurora Cannabis Inc. that CBD is the next miracle cure for arthritis, should immediately raise suspicion. Although there are legitimate studies on the therapeutic benefits of nicotine and cannabidiol, there are also serious side effects, and FDA-approved use is limited to specific conditions and doses.

Mike Caulfield, director of blended and networked learning at Washington State University, builds on the idea of lateral reading with a process he calls SIFT: Stop, Investigate the source, Find additional coverage, and Trace claims back to the original source.

Stop means that when you see a post or news article, pause before reading and consider whether it is a reputable source, as discussed above.

Investigate the source if you aren’t familiar with it. Make sure the user profile is authentic. Check the “About Us” section of the website. Look for other stories the author/publisher/creator shares to see if there is a specific agenda at play. Check the Better Business Bureau to make sure a business or charity is legitimate.

Find additional coverage means seeing what other sources have to say about a news story. Because it is difficult to completely remove all bias from reporting, seeing the news from different points of view will help you understand it better.

Suppose you have two headlines: one that reads “Treatment X doubles survival rate for severe COVID-19 cases” and another saying “Treatment X only effective in 8% of severe COVID-19 cases.” Although each article takes a different approach to the story, both should report the same facts: Treatment X increases survival rates of severe cases from 4% to 8%.
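The arithmetic behind those two headlines can be checked in a few lines. The Python sketch below is only an illustration: the function name `describe_change` is made up for this example, and the 4% and 8% figures are the ones from the hypothetical Treatment X story above.

```python
def describe_change(baseline_rate, treated_rate):
    """Return the relative and absolute change between two rates.

    Rates are fractions between 0 and 1. The function name and the
    return format are illustrative choices, not a standard.
    """
    relative_change = treated_rate / baseline_rate   # how many times the baseline
    absolute_change = treated_rate - baseline_rate   # same units as the rates
    return relative_change, absolute_change


# Hypothetical Treatment X numbers from the text: survival goes from 4% to 8%.
relative, absolute = describe_change(0.04, 0.08)
print(f"Survival is {relative:.1f} times the baseline rate")
print(f"Absolute gain: {absolute * 100:.0f} percentage points")
```

Both headlines describe the same result. "Doubles" reports the relative change, while "8%" reports the rate after treatment, and a reader needs both numbers to judge how much the treatment matters.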

Moreover, big stories are usually reported by many sources, so sparse coverage of an event or topic is a red flag. Likewise, true scientific breakthroughs will be commented on and tested by multiple scientists. Consider your course lab: if you determine that the pKa of the acid in your organic lab is 4.7, and your classmates get 4.6 or 4.8, the pKa is probably right. But if your classmates are getting around 3.8, or your teaching assistant says you’re working with formic acid, you’ve probably messed up.
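The lab comparison above amounts to checking one result against independent replications. Here is a minimal Python sketch of that idea, assuming a tolerance of 0.2 pKa units counts as agreement. That threshold, the function name `agrees_with_class`, and the third classmate value in each list are assumptions made for illustration.

```python
from statistics import mean


def agrees_with_class(my_value, class_values, tolerance=0.2):
    """Return True if my_value is within `tolerance` of the class average.

    The default tolerance is an arbitrary choice for this example.
    """
    return abs(my_value - mean(class_values)) <= tolerance


my_pka = 4.7

# Classmates who measured about the same value as you did.
similar_results = [4.6, 4.8, 4.7]
# Classmates whose values cluster near 3.8 instead (illustrative numbers).
different_results = [3.8, 3.7, 3.9]

print(agrees_with_class(my_pka, similar_results))
print(agrees_with_class(my_pka, different_results))
```

The same logic applies to a news story: a claim that independent groups reproduce is more believable than one that stands alone.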

Trace means following content back to the original source. You may find that a particular news story is years old, or that it has been reposted out of context. It could also be based on a scientific claim that’s been refuted, is out of date, or just junk.

Find the original paper that the article or post is discussing and evaluate the actual work, recommends Joseph Glajch, a Ph.D. chemist with 40 years of experience in pharmaceuticals who now owns JLG AP Consulting. “Nowadays you can go on the Internet and the good news is you can find almost anything,” he says. “The bad news is you can find almost anything.”

Glajch starts by finding the original paper in peer-reviewed journals through a search of SciFinder, PubMed, or Google Scholar. If there is no peer-reviewed study, that’s a sign the story is not credible.

Even if you aren’t an experienced medical researcher, you already know enough science to draw some initial conclusions. Ask yourself:

- What are the claims that the paper makes in the abstract and/or conclusion? (These are easily misinterpreted by writers outside of the field.)

- Are the authors of the paper experts in their fields? (A physicist would not be expected to appear on a paper about epidemiology.)

- Are the charts/graphs the same as what is being shown in the mainstream media? (Mistakes happen!)

- Is the methodology sound? (This may be harder for you to evaluate at this stage, but you can certainly tell if there is a large sample size, control groups, and reasonable data errors.)

Glajch checks citation indexes to see how often, and by whom, a paper has been cited. More citations usually mean more people have found the paper valuable to their own research. In the case of a drug or other regulated product, he checks to see if it has been reviewed by a regulatory agency. When researching an intervention undergoing clinical trials in the United States, he checks the National Institutes of Health site ClinicalTrials.gov.

Another way to gauge legitimacy is to check whether a paper is sponsored by an organization with a stake in the related industry. For example, the poultry industry may sponsor research claiming health benefits of chicken.

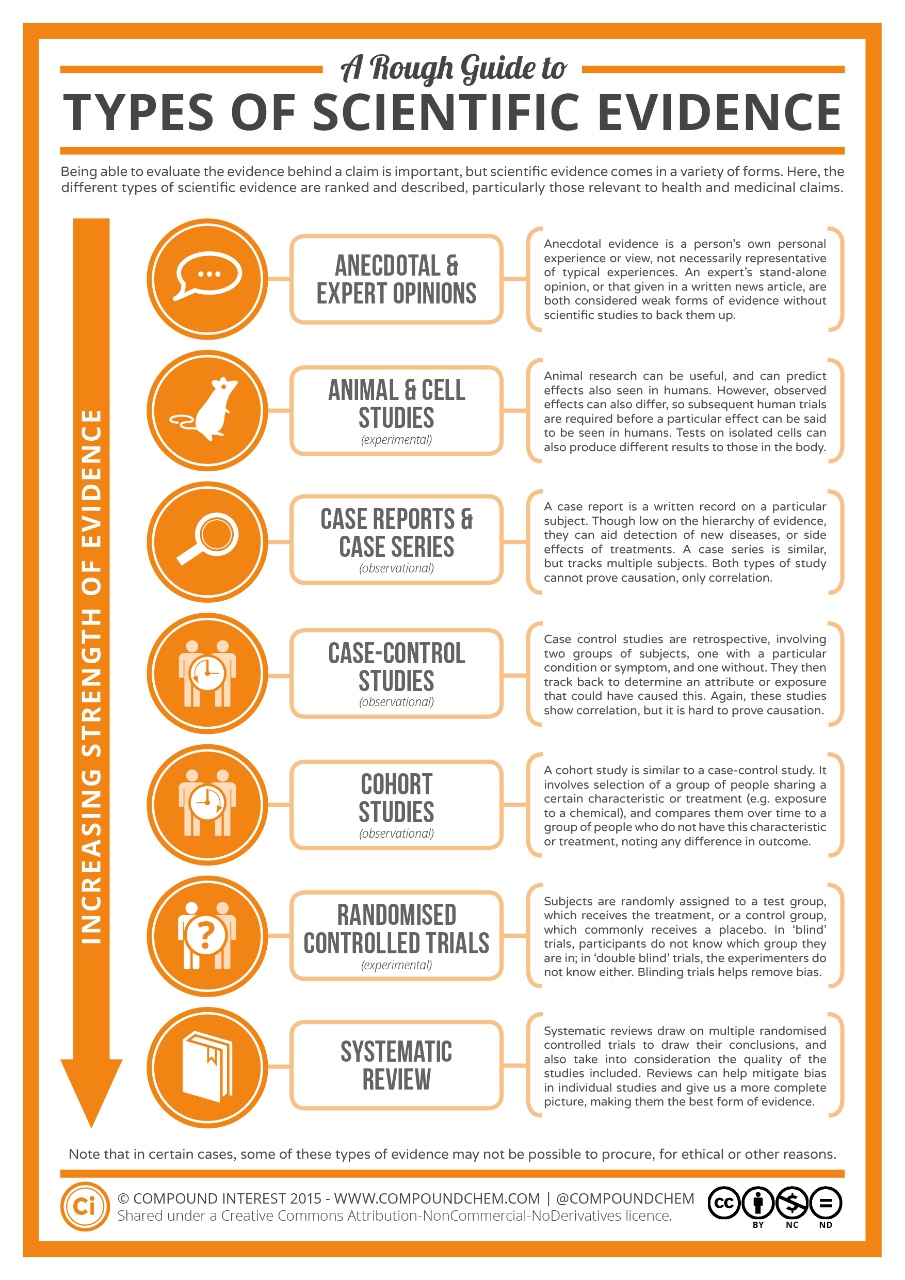

Evidence vs. “Evidence”

As you know by now, not all evidence is created equal. Anecdotal evidence and expert opinions can give you ideas on things to research, but just because your buddy felt sick after getting a flu vaccine doesn’t mean that the vaccine gives you the flu. Individual experience can’t compare to a large, randomized study with a control group. Remember Bohannon’s chocolate study? Those data were real. But Bohannon took 15 subjects and reported only the data that supported his conclusion.

Percentages and multiples can be used to hide small sample sizes, as well. An article on the chocolate study could accurately say that chocolate-eating dieters lost weight 10% faster, hiding the fact that there were only a handful of dieters involved, the study lasted only 3 weeks, and each dieter lost only a pound or two.
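To see how a percentage can dress up a tiny difference, consider the short Python calculation below. The two weight-loss values are invented for illustration and are not taken from Bohannon's paper. They were chosen only to be consistent with "a pound or two" and "10% faster."

```python
# Hypothetical average weight loss over 3 weeks, in pounds.
# These are made-up numbers, not data from the actual study.
control_loss = 2.0
chocolate_loss = 2.2

difference_lb = chocolate_loss - control_loss
percent_faster = difference_lb / control_loss * 100

print(f"{percent_faster:.0f}% faster, a difference of {difference_lb:.1f} lb")
```

A headline would quote the percentage. The underlying difference, a fraction of a pound among a handful of people, is well within normal day-to-day changes in body weight.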

As a chemistry student, you must also consider the difference between correlation and causation. You can easily see that an increase in ice cream consumption is correlated with an increase in sunburns, but that doesn’t mean ice cream causes sunburns; it means that the increased heat and sun exposure of summer causes both.
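A small simulation can make the ice cream example concrete. In the Python sketch below, all numbers are invented: daily temperature drives both ice cream sales and sunburn counts, and neither of those two quantities appears in the other's formula. The `pearson` helper is a plain implementation of the Pearson correlation coefficient written for this example.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs) ** 0.5
    spread_y = sum((y - mean_y) ** 2 for y in ys) ** 0.5
    return covariance / (spread_x * spread_y)


random.seed(0)  # fixed seed so the run is repeatable

# Invented model: temperature (deg C) is the hidden common cause.
temperature = [random.uniform(0, 35) for _ in range(365)]
ice_cream = [2.0 * t + random.gauss(0, 5) for t in temperature]
sunburns = [0.5 * t + random.gauss(0, 2) for t in temperature]

r = pearson(ice_cream, sunburns)
print(f"Correlation between ice cream and sunburns: {r:.2f}")
```

The two series come out strongly correlated even though, by construction, ice cream has no effect on sunburns. Whenever two things rise and fall together, it is worth asking whether a third factor could be driving both.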

Here’s another example of analyzing correlation and causation: Anti-vaxxers often cite the 20-30 vaccines children receive in their first two years as the cause of autism. However, autism spectrum disorder (ASD) is diagnosed by challenges with developmental milestones that most children reach between their second and third birthday. So, while an ASD diagnosis can be correlated with those vaccines, it can also be correlated with eating table foods and toilet training.

Glajch pays attention to how authors describe their experiments. “I look for a very complete description of what they did, how they did it, what instruments they used, what chemicals they used, where they sourced them from,” he says. “If you can’t put it all down on paper, you probably don’t have a very good piece of work.”

He also scrutinizes the data. “How many times did the person do the experiment? How many subjects did they look at? Don’t just look at an average value, for example. If they analyze something 10 times, what’s the average value, range, and standard deviation? That tells you a lot about how good their techniques are.”
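The summary statistics Glajch mentions are easy to compute yourself when a paper reports raw values. The Python sketch below uses ten made-up replicate measurements. The numbers are purely illustrative and do not come from any study.

```python
from statistics import mean, stdev

# Ten hypothetical repeat measurements of the same quantity.
measurements = [4.71, 4.68, 4.73, 4.70, 4.69, 4.72, 4.70, 4.71, 4.67, 4.74]

average = mean(measurements)
value_range = max(measurements) - min(measurements)
spread = stdev(measurements)  # sample standard deviation (n - 1 denominator)

print(f"n = {len(measurements)}")
print(f"average = {average:.3f}")
print(f"range = {value_range:.2f}")
print(f"standard deviation = {spread:.3f}")
```

An average reported without the range or standard deviation hides how much the individual runs disagreed, which is exactly the information that tells you how careful the technique was.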